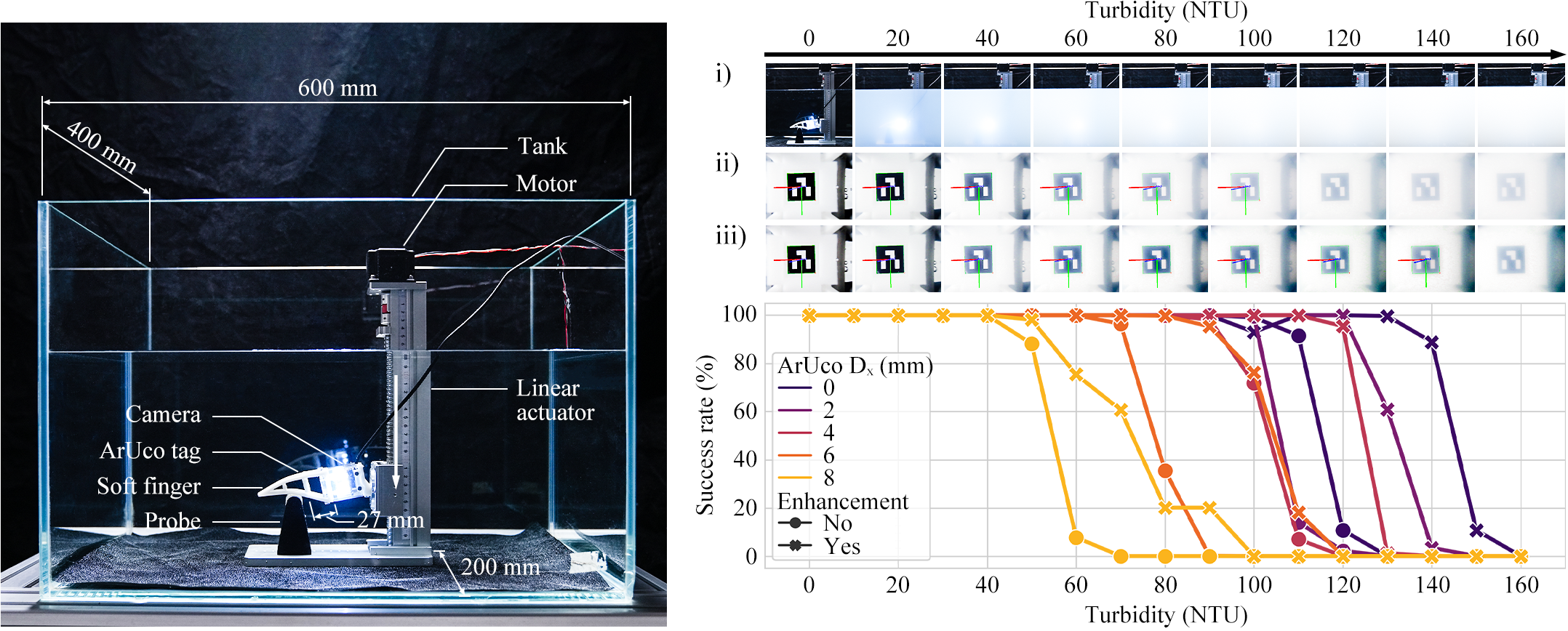

Our proposed rigidity-aware AMH method effectively transforms the visual perception process for deformable

shape reconstruction into a marker-based pose recognition problem. Therefore, the underwater performance of

our vision-based tactile sensing solution is directly determined by how reliably the fiducial marker poses

used in our system are recognized under different turbidity conditions. Turbidity is an optical

characteristic that measures the clarity of a water body and is reported in Nephelometric Turbidity Units

(NTU). Suspended particles attenuate light, which reduces the visibility of optical cameras used for

underwater inspection. Because turbidity is one of the critical indicators of water quality, it has been

studied extensively for large water bodies worldwide. For example, previous research reports that the

Yangtze River's turbidity ranges between 1.71 and 154 NTU.

We investigated the robustness of our proposed VBTS solution in different water clarity conditions by

mixing condensed standard turbidity liquid with clear water to reach different turbidity ratings. Our

proposed soft robotic finger is installed on a linear actuator in a tank filled with 56 liters of clear

water. A probe is fixed under the soft robotic finger, inducing contact-based whole-body deformation when

the finger is commanded to move downward. In our experiment, for the turbidity range between 0 and 40 NTU,

the raw images captured by our in-finger vision achieved a 100% success rate in ArUco pose recognition.

At 50 NTU turbidity, the first failures in marker pose recognition were observed at the largest induced

deformation of 8 mm. Our experiment shows that this issue can be alleviated using simple image enhancement

techniques, which restored a 100% marker pose recognition success rate. However, the marker pose

recognition performance under large-scale whole-body deformation quickly deteriorated when the turbidity

reached 60 NTU and eventually became unusable at 70 NTU. With image enhancement, the turbidity at which

marker pose recognition under large-scale whole-body deformation became completely unusable rose to

100 NTU. For turbidity above 100 NTU, simple image enhancement provides limited

contributions to our system. Our experiment shows that when the turbidity reached 160 NTU, our in-finger

system failed to recognize any ArUco pose underwater, even after image enhancement.
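The mixing step described above follows a simple mass balance, which the sketch below uses to estimate how much concentrated standard liquid to add for each target turbidity. It assumes that turbidity scales linearly with particle concentration and that the tank starts at 0 NTU. The 4000 NTU stock value and the function names are illustrative assumptions, not values reported for this experiment; only the 56-liter tank volume comes from the setup.

```python
def stock_volume_to_add(current_ntu, target_ntu, current_volume_l, stock_ntu=4000.0):
    """Liters of stock liquid to add so the mixture reaches target_ntu.

    Mass balance (assumes linear mixing):
        current_ntu * V + stock_ntu * v = target_ntu * (V + v)
    stock_ntu is a hypothetical concentration of the standard liquid.
    """
    if not current_ntu <= target_ntu < stock_ntu:
        raise ValueError("target must lie between current and stock turbidity")
    return (target_ntu - current_ntu) * current_volume_l / (stock_ntu - target_ntu)


def dosing_schedule(targets_ntu, tank_volume_l=56.0, stock_ntu=4000.0):
    """Return (target, liters_added, total_volume) for increasing targets."""
    schedule = []
    ntu, volume = 0.0, tank_volume_l  # tank assumed to start with clear water
    for target in targets_ntu:
        added = stock_volume_to_add(ntu, target, volume, stock_ntu)
        volume += added  # the added stock also increases the tank volume
        ntu = target
        schedule.append((target, added, volume))
    return schedule


if __name__ == "__main__":
    for target, added, volume in dosing_schedule(range(10, 170, 10)):
        print(f"{target:>3} NTU: add {added * 1000:.1f} mL (tank now {volume:.3f} L)")
```

Because each dose slightly increases the total volume, the schedule is computed incrementally rather than from the initial 56 liters alone.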

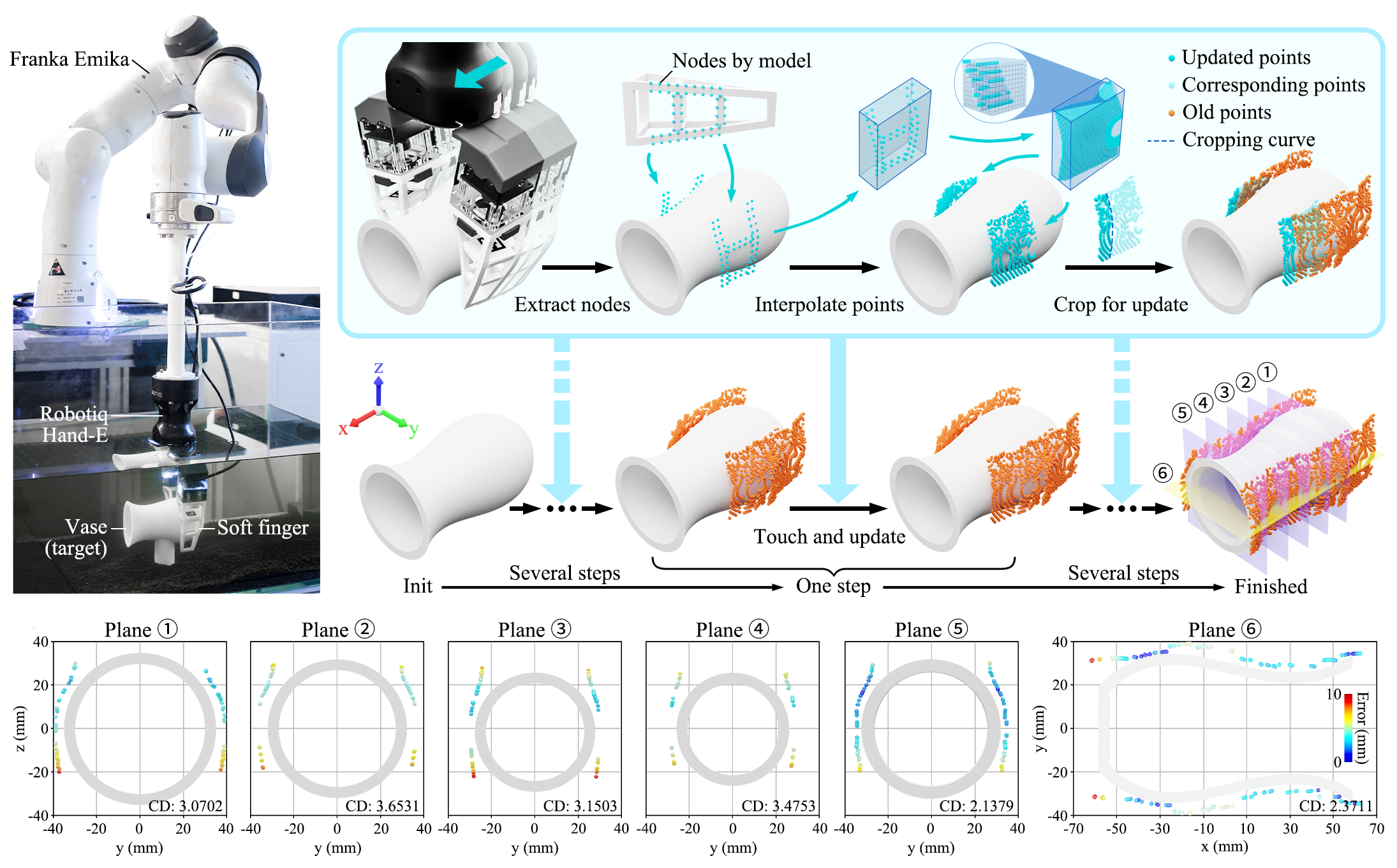

While proprioception refers to being aware of one's movement, tactile sensing involves gathering

information about the external environment through the sense of touch. This section presents an object

shape estimation approach that extends the proposed PropSE method to tactile sensing.

Since our soft finger can provide large-scale, adaptive deformation conforming to the object's geometric

features through contact, we can infer shape-related contact information from the finger's estimated

shape during the process. We assume the soft finger's contact patch coincides with that of the object

during grasping. As a result, we can predict object surface topography using spatially distributed contact

points on the touching interface. In this case, we used a parallel two-finger gripper (Hand-E from Robotiq,

Inc.) attached to the wrist flange of a robotic manipulator (Franka Emika) through a 3D-printed

cylindrical rod for an extended range of motion. Our soft robotic fingers are attached to each fingertip

of the gripper through a customized adapter fabricated by 3D printing. With the gripper submerged

underwater, the system is programmed to sequentially execute a series of actions, including gripping and

releasing the object and moving along a prescribed direction for a fixed distance to acquire underwater

object shape information.
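The grip, release, and move sequence above yields one set of contact points per touch, and these sets must be expressed in a common frame before a surface can be assembled. The following is a minimal sketch of that bookkeeping, assuming the gripper only translates by a fixed step along the prescribed direction between touches (no rotation). The function name and argument layout are hypothetical and are not taken from the system described here.

```python
def accumulate_contact_points(touches, direction, step):
    """Merge per-touch contact points into one cloud in the start frame.

    touches   : list of touches; each touch is a list of (x, y, z) points
                expressed in the gripper frame at that touch.
    direction : unit vector of the prescribed motion, in the start frame.
    step      : fixed distance travelled between consecutive touches.
    Assumes pure translation between touches (an illustrative simplification).
    """
    cloud = []
    for k, points in enumerate(touches):
        # Gripper displacement accumulated before the k-th touch.
        offset = tuple(k * step * d for d in direction)
        for p in points:
            cloud.append(tuple(c + o for c, o in zip(p, offset)))
    return cloud


if __name__ == "__main__":
    touches = [
        [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],  # first touch
        [(0.0, 0.5, 0.0), (1.0, 0.5, 0.0)],  # second touch, after one step
    ]
    for point in accumulate_contact_points(touches, (0.0, 0.0, 1.0), 2.0):
        print(point)
```

If the manipulator also rotates between touches, the offset would be replaced by a full rigid-body transform obtained from the robot's kinematics.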

We demonstrate our method on real data collected during the underwater tactile exploration experiment. The

shape estimate at each cutting plane is compared against the ground truth using the Chamfer Distance (CD).

We chose five vertical cutting planes and one horizontal cutting plane to evaluate the reconstructed object

surface. For each cutting plane, a calibration error exists between the vase and the

Hand-E gripper, leading to the expected gap between the reconstructed and ground truth points. In addition

to the systematic error, we have observed a slight decrease in the CD metric values between planes 1 and 5

compared to planes 2, 3, and 4, which could be attributed to the limitations of the soft finger in

adapting to small objects with significant curvature. On the other hand, by employing tactile exploration

actions with a relatively large contact area on the soft finger's surface, the shape estimation of objects

similar in size to the vase can be accomplished more efficiently, typically within 8-12 touches.
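For reference, the Chamfer Distance used in this comparison can be sketched as follows. Several conventions exist (squared or unsquared distances, sum or mean); this sketch assumes the symmetric mean of unsquared nearest-neighbor Euclidean distances, which may differ from the exact variant used in the evaluation. The sample points are made up for illustration.

```python
import math


def chamfer_distance(points_a, points_b):
    """Symmetric Chamfer Distance between two non-empty point sets.

    Convention assumed here: mean nearest-neighbor Euclidean distance
    from A to B plus the same quantity from B to A (no squaring).
    """
    if not points_a or not points_b:
        raise ValueError("both point sets must be non-empty")

    def mean_nearest(src, dst):
        # Average, over src, of the distance to the closest point in dst.
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    return mean_nearest(points_a, points_b) + mean_nearest(points_b, points_a)


if __name__ == "__main__":
    # Hypothetical 2D points on one cutting plane.
    ground_truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    reconstructed = [(0.0, 0.1), (1.0, 0.1), (2.0, 0.1)]
    print(chamfer_distance(reconstructed, ground_truth))
```

A constant offset between the two point sets, such as one caused by calibration error, raises the metric even when the reconstructed shape itself is accurate.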

Here, we provide a full-system demonstration using our vision-based soft robotic fingers on an underwater

Remotely Operated Vehicle (ROV, FIFISH PRO V6 PLUS, QYSEA). The ROV includes a single-DOF robotic gripper,

which can be fitted with the proposed soft fingers through customized adapters. Our design adds

omni-directional adaptation and real-time underwater tactile sensing to the gripper's existing

functionality. From the in-finger images, the methods proposed in this work enable real-time

reconstruction of contact events on our soft robotic finger.
